The Arc Project Update #2 – Tell The Model Workshop, part 1

This blog post is part 1 of our report on a workshop we held in December 2021 with Skåne screenwriters and AI researchers to experiment with a fascinating emerging technology.

By Hussain Currimbhoy

As an aspiring screenwriter myself, I began to study how movies are written. To do that I had to understand how my scripts compare to films that have been successfully made already. Looking at genre films specifically, I wondered if there was a way to feed my project into a computer program, an AI model, to identify what makes my story stand out. Or worse. What makes it reductive or cliched.

Our friends at BOOST liked the idea and thought this was an area to explore with real life writers and real AI scientists. At first I felt the AI realm was worlds apart from that of the screenwriter. But when I met Mohanty Sharada, CEO of The AI Crowd, at the Cineglobe Festival in Geneva last year, I realized there are a lot of similarities between our communities. AI developers are also creators. Both are driven by people with dreams, who are imaginative problem solvers and are forceful with their ideas.

Every script is like a prototype. Everything in it, and everyone working on it, is testing out its potential for a market, but also fulfilling a desire to contribute something beneficial to the world. To make a difference. Mohanty and I talked and realized that if we were going to create an AI model that was made for scriptwriters we needed to understand each other’s tools and working methods more deeply.

So we arranged this workshop. We welcomed Måns Thunberg, Andreas Cliément and Filson Ali, all locally based screenwriters with varying levels of experience, but united in their curiosity about what AI could bring to the grinding task of writing a screenplay.

From the AI Crowd we welcomed Florian Laurent, Martin Muller, Nimish Santosh and Sneha Nanavati. We were a young but enthusiastic bunch. Passion, I have learned, always wins.

Firstly we had to learn about an AI model called GPT3 (Generative Pre-trained Transformer 3). This is an autoregressive language model that uses deep learning to produce human-like text. Essentially, the model learns from a huge amount of text collected from the internet which words tend to appear together, and it uses those patterns to predict what comes next. For example, there is a good chance that in this sentence: “A fireman drove to extinguish the ____ at a house” the missing word is ‘fire’.
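To make the idea of predicting the next word concrete, here is a toy sketch in Python. It is not how GPT3 works internally (GPT3 is a large neural network, not a table of counts), and the tiny corpus and the `predict_next` helper are invented purely for illustration. It simply counts which word most often follows a pair of words.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus; a real model learns from billions of words.
corpus = (
    "the fireman drove to extinguish the fire . "
    "the crew worked to extinguish the fire . "
    "she lit the candle . "
    "they tried to extinguish the blaze ."
).split()

# Count which word follows each pair of consecutive words.
following = defaultdict(Counter)
for a, b, c in zip(corpus, corpus[1:], corpus[2:]):
    following[(a, b)][c] += 1


def predict_next(a, b):
    """Return the word seen most often after the pair (a, b), or None."""
    counts = following.get((a, b))
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# "fire" follows "extinguish the" twice, "blaze" only once.
print(predict_next("extinguish", "the"))
```

The more often a pattern appears in the text the model has seen, the more confidently it gets predicted.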

Since it was launched, GPT3 has been embraced in many ways. It can be implemented as a chatbot, offering answers to some basic questions like ‘What is the average life expectancy of a male in Sweden?’ Or it can behave like a philosopher, offering inspiring quotes (we will get into that later).

The group began to play with a GPT3 application and just asked silly questions:

“When will I need my next haircut?” The model answers: In about 4 weeks.

The model can offer up different answers every time, which was fine, until we discovered the temperature button.

Florian explained that if you turn that temperature switch up, the model will deliver more random answers to your questions. With a low temperature an AI model will offer deterministic and repetitive answers. This exemplifies the interaction most of us have with AI when we use Siri. Hence our associations with AI can be a bit mundane because AI applications, for the most part, are products. And they want to be liked so they play it safe.
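For the curious, here is a minimal sketch of what a temperature setting does, assuming the common approach of dividing the model's scores by the temperature before turning them into probabilities. The candidate answers and their scores are made up, and a real model picks one word at a time rather than whole answers.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidate answers and made-up scores (not real GPT3 output).
answers = ["In about 4 weeks.", "Next month.", "You don't need a haircut."]
scores = [4.0, 2.0, 0.5]


def sample_answer(temperature, rng):
    """Pick one answer at random, weighted by its probability."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(answers, weights=probs, k=1)[0]


rng = random.Random(0)
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(scores, t)
    print(t, [round(p, 3) for p in probs], sample_answer(t, rng))
```

At a low temperature almost all of the probability sits on the top-scoring answer, so you keep getting the same reply. At a higher temperature the unlikely answers get a real chance of being picked.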

We unanimously wanted the temperature up!

Suddenly, when we asked ‘When do I need my next haircut?’ it answered, “You don’t need a haircut.”

Randomness. Unexpectedness. That’s where the juice is in a script.

Måns had a fragment of a script idea and explained he was a bit stuck with a detail in the story. We entered this paragraph of his idea into GPT3:

A little kid gets run over by a car during a hit and run. In the car involved, one person is good and one person is bad. They are on the run from something. They dump the dead child somewhere. The ghost of the kid wants to figure out what happens.

Måns wondered what the people in the car could have been doing. What were their motivations? Motivation is core to understanding your character. Without it you could have a sticking point.

We ended the paragraph with the prompt: Write the ghost story movie script.

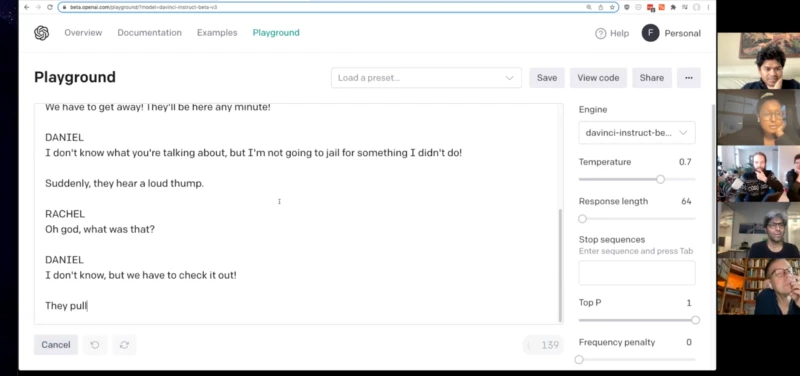

Remarkably, the model produced a half page of formatted film script in a few moments, creating characters with names, (Rachel and Daniel) who are now driving in a car at night.

The AI’s version read like this: the two characters are frantic as they drive along until they hear a ‘thump’ in the road. They get out and hide the child’s body.

We immediately marveled at how the model absorbs the information offered up and produces a coherent narrative. While it did offer a workable next action, there was no build up or atmosphere.

The model just went straight to the story points. In a way this is helpful as we often find ourselves laboring over atmosphere in an attempt to lure the reader to engage with our scripts. But I felt a limitation in the model already. If a human wrote it we could expect some interactions between the characters as they drive. We have drama broadly, but not intimately.

At this point Mohanty wanted to experiment with ‘prompt engineering’, and suggested we ask the model ‘find ideas of what these people were running away from.’

We formulated these as a list. Perhaps Rachel and Daniel were:

coming home drunk from a party

or

running away from a bank robbery.

Then we turned up the temperature. The model replied with several answers but my favorite was: “They were running from a car accident they were involved in”.

As absurd as it was, the idea of someone escaping a car accident only to have another accident was entertainingly unpredictable and a rather over-the-top way of depicting character traits. Such a scene immediately provoked me to think of the backstory of these characters in a way that I doubt any of us would have conjured ourselves.

Inspired, we decided to push further. In order to challenge the model, we had to give it a context it might be less familiar with. Of course: time. We told the model that this story takes place in the year 1600.

Suddenly the reasons for a couple running away ranged from ‘Rachel was working at an underground club’ to ‘they jumped from an escort’. Our minds were swirling with potential story directions.

We changed the model again to create ‘an instruction’ (I am not sure exactly what this means).

The model came up with “A group of angry villagers who are looking for the people who ran over the kid” and “A group of bandits who are also after the cart”.

I felt as though I knew this scene from a fairytale or a past film. It was a scene that existed in our cultural subconscious: you can picture an angry mob with torches raised high over their heads, looking for their scapegoat (so to speak). The AI wanted to please us. It was drawing from cultural cues that we know and love. But part of me wanted something more. This nagging feeling returned later in the workshop.

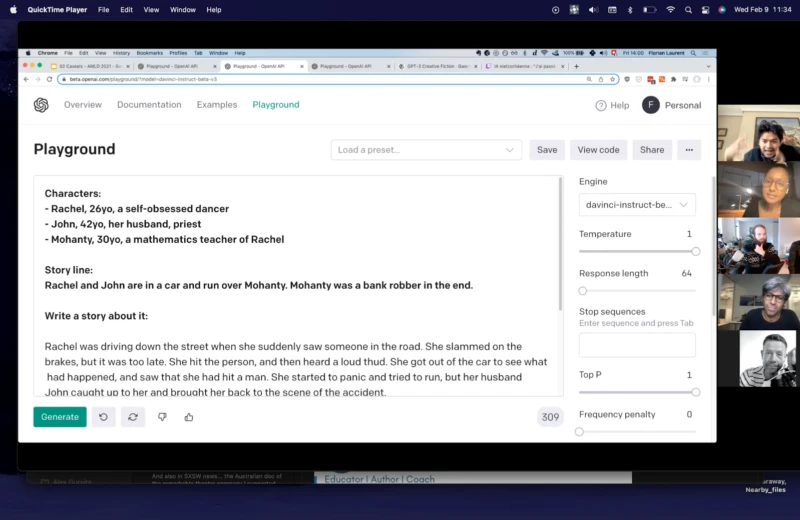

It was apparent that the prompt itself was a key component in making AI operate in ways that would benefit our needs today. So what if we gave it parameters to build a storyline around? How about some basic characters?

Rachel, 26-year-old dancer

John, 42-year-old husband

Mohanty, 30, teacher. (This character outline represented something less common that would challenge the model. Just a little.)

We fed the model this story line: Rachel and John are in a car and run over Mohanty.
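As a rough sketch of what this kind of prompt engineering amounts to, the Python below glues character notes, a storyline and an instruction into one block of text for the model to continue. The layout, the `Character` class, the `build_prompt` helper and the closing instruction are all assumptions made for illustration; the workshop typed its prompts by hand.

```python
from dataclasses import dataclass


@dataclass
class Character:
    name: str
    age: int
    role: str


def build_prompt(characters, storyline, instruction):
    """Assemble character notes, a storyline and an instruction into one prompt."""
    lines = ["Characters:"]
    for c in characters:
        lines.append(f"- {c.name}, {c.age}, {c.role}")
    lines.append("")
    lines.append(f"Storyline: {storyline}")
    lines.append("")
    lines.append(instruction)
    return "\n".join(lines)


cast = [
    Character("Rachel", 26, "dancer"),
    Character("John", 42, "husband"),
    Character("Mohanty", 30, "teacher"),
]
prompt = build_prompt(
    cast,
    "Rachel and John are in a car and run over Mohanty.",
    "Write the scene.",  # hypothetical instruction, not the workshop's wording
)
print(prompt)
```

Changing one line of the cast list, such as turning the teacher into a bank robber, gives the model a different starting point without rewriting the whole prompt.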

In a few moments the model created this scenario:

Rachel was driving to her dance class when she accidentally ran over Mohanty, a teacher who was crossing the street. She was horrified and couldn’t believe she had hit him. Her husband John was with her in the car and he immediately called for help. Mohanty was rushed to hospital but he died from his injuries.

Rachel was devastated and felt like she had killed him. She was arrested and charged with manslaughter. In the end she was sentenced to prison time and had to live with the guilt of killing someone

(Guilt! I like it! Gimme more of that please AI model. I took note: ensure this emotional tension is pursued in future work. The model displayed intuition that morality was core to our relationship to this crime. If it did not provide guilt in a character, the AI risked turning the user off. Yet, I thought of great films like ‘The Silence of the Lambs’ and ‘Monster’ where the ideas of guilt and morality were abstracted. That delicate obfuscation of guilt in the lead characters is what made these films so memorable.)

At this point we needed to add more character layers, like ‘Mohanty was a bank robber’ and ‘John was a priest’.

Rachel was driving down the street when she suddenly saw someone in the road. She slammed on the brakes but it was too late. She hit the person and heard a loud thud. She got out of the car to see what had happened, and saw she had hit a man. She started to panic and tried to run but her husband John caught up with her and brought her back to the scene of the accident.

How did Rachel become so selfish? It is like she is running from her past, as well as this accident, and her long-suffering husband John is about to be betrayed. We do not know this Rachel character at all. The old adage is true: put the character into a crisis to see who they really are.

I liked this small twist the AI produced of Rachel escaping the scene and trying to abandon her husband in the process. It reminded me to think of the audience, at least a couple of times in the draft stage, by taking something the viewer might take for granted and pulling the rug out from under their feet. You think they are a trusting married couple? Think again.

Taking another route, Filson entered her parameters for a story she is working on: Maya is an ambitious aspiring writer who must navigate Swedish culture, Somali culture in a Swedish society.

The model came up with:

Maya is a Somali-Swede who is trying to make it as a writer. She often feels like she’s stuck between two cultures, and it can be difficult to find her place in Swedish society. However, she is determined to succeed and uses her writing to explore her dual identity. Maya is also a member of a book club that meets every week to discuss different books. At their last meeting they discussed a novel about a young Somali woman who moves to Sweden and struggles to find her identity. Maya was excited to share her own experiences and to hear the perspectives of her fellow book club members.

We recognized the need to add some drama. The prompt itself was tweaked: she’s about to catch the train for her work just like everyday but today things won’t happen as planned:

The model said:

Maya is on her way to work as usual when she notices something different. The train was crowded and she couldn’t find a seat. She started to feel uneasy, especially when she saw a group of teenagers cornering an old man. The situation escalated quickly before she knew it.

Not quite there. We realized the story needed more context to catch our imagination. Place, location, was important and in this case the city could behave like a character, like in Sex and the City.

So we changed the prompt to ‘she begins her day like everyday at her home in Brooklyn where she is waiting for the subway, but this day is going to be very different.’

The small tweak produced much more interesting results.

“The subway appears and the doors open and Maya is pushed forward by the crowds. She tried to stay calm, but when the train doors closed, she felt a sharp pain in her back. She had been robbed. Maya is devastated because she saved up her money for her classes”

Suddenly the scenario came to life as the mundane trip to work turned into a life and death situation. Most of us in the working group liked this turn of events and we discussed adding a prompt where perhaps the pain in her back was because she was stabbed – and the next prompt could be how she suddenly saw blood.

But Filson did not want violence to be part of the story. Filson’s intentions were to tell her story in a comedy/dramedy space and this was not taking her there. We added the genre within the prompt itself, asking the model to adopt a dramedy-esque tone:

Maya is an ambitious aspiring writer who navigates between Swedish and Somali cultures in a Swedish society.

She begins her day like every day at her home in Brooklyn where she is waiting for the subway, but this day is going to be very different. Maya’s roommate Hanna wakes her up and insists that she take the subway to a job interview that Maya had completely forgotten about.

Maya arrives at the interview flustered and sweaty, only to realize it is not an interview but a Swedish quiz show. She is embarrassed and frustrated but decides to participate in the quiz anyway. Through her knowledge of Somali culture and her perseverance, Maya manages to win the quiz show and gain the admiration of the audience.

Filson responded well to this output because it aligns with a goal that many writers of color aspire to: avoiding stereotypical characters in our dramatic scenarios. The game show scenario the AI conjured here felt relatable. More importantly, I wanted to know what happened next.

I began to wonder how these models worked, and learned that they are typically trained on text gathered from the internet. It turns out that scraping a lot of text from the web is a good, cheap and scalable way to create *large* amounts of learning material for the model. So one group of researchers scrapes data from the web, for example, and then another group of researchers, when training their AI models, simply has the models learn from that scraped data. That word relationship pattern I talked about earlier? That is key, because AI locates these patterns in constructions that are common in language, and therefore common in culture. When the training text contains too few examples of how certain words relate, the model goes off track. If you ask it to think about a character or storyline like Filson’s, maybe the model cannot find enough culturally specific relationships between the kinds of characters and words Filson wants to work with. I sensed the model was falling back on a twist on a ‘Slumdog Millionaire’ scenario.

It became clear that this question of where the model gets its information from has to be considered more deeply when we get to the point of making our screenwriting algorithm. Writing by women, and by people outside the Western system of screenwriting, will be necessary to create a fairer engine. On a creative level this was key. To avoid repeating the patterns of the past, we had to be mindful to include a kind of internationalism, or at least a broader scope of inputs to the AI.

Stay tuned for part II